
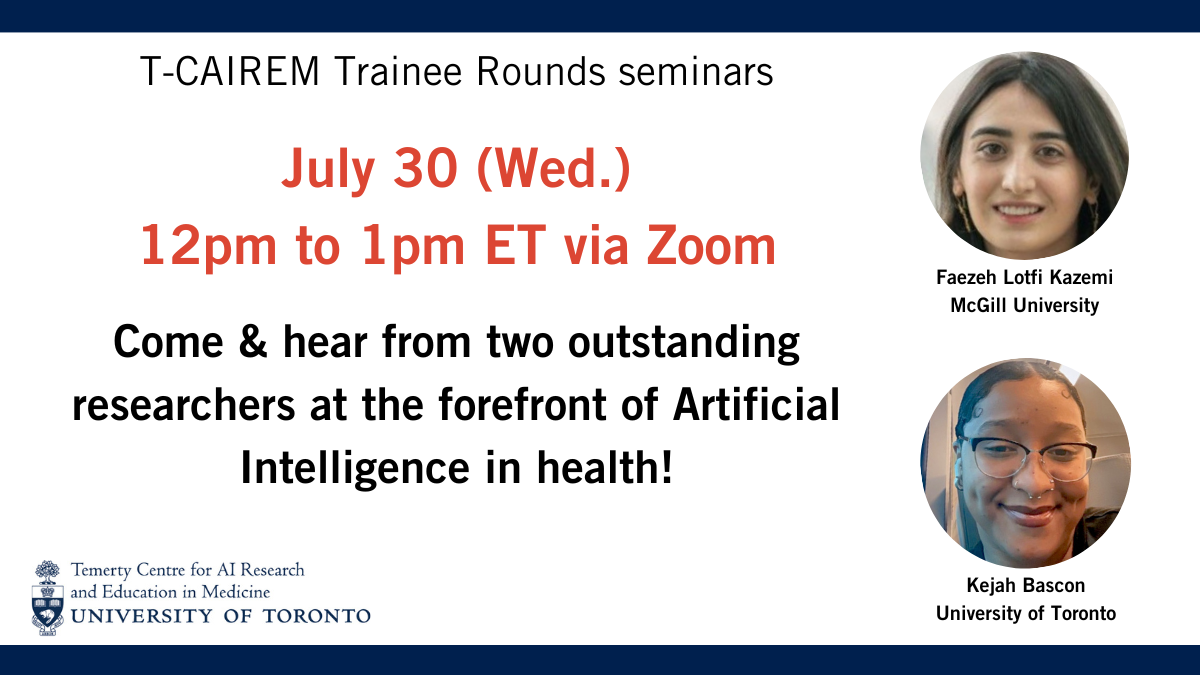

Trainee Rounds: Faezeh Lotfikazemi and Kejah Bascon

DATE: July 30, 2025 (Wed.)

TIME: 12pm to 1pm ET

PRESENTERS: Faezeh Lotfikazemi and Kejah Bascon


Faezeh Lotfikazemi

Title of Talk

AI-Driven Needle-Free OS-CMR for Classifying Ischemic, Non-Ischemic, and Edema Patterns: A Novel Approach with Pathological Feature Mapping

Description

Cardiovascular disease remains a leading global health concern, creating a need for innovative needle-free diagnostic tools. This study explores the integration of oxygenation-sensitive cardiovascular magnetic resonance (OS-CMR) imaging with deep learning to classify myocardial scar and edema patterns into four classes: ischemic (42 cases), non-ischemic (33 cases), edema (47 cases), and healthy (68 cases). Using transfer learning, a VGG19-based model was trained on resized and preprocessed OS-CMR images and achieved robust classification (Figures 2 and 3). On the test set, the model reached 91.0% accuracy and 91.5% precision, with a recall of 74.0%. Multi-class ROC-AUC analysis yielded per-class scores of 0.91 (healthy), 0.86 (ischemic), 0.88 (non-ischemic), and 0.97 (inflammation/edema) (Figures 4 and 5). Novel feature map visualizations aligned closely with LGE, T1, and T2 maps, highlighting pathological patterns and validating the model's localization capabilities (Figure 6). These findings underscore the feasibility of AI-enhanced OS-CMR as a needle-free, contrast-free alternative for detecting myocardial scar and edema, paving the way for safer and more accessible cardiac diagnostics in clinical practice.

Kejah Bascon

Title of Talk

AI Amplified Health Equity: Investigation of Individualized Patient Education Materials on Comprehension, Satisfaction, and Trust

Description

Informed consent in healthcare ensures that patients fully understand their medical procedures before making decisions. However, comprehension barriers such as limited health literacy, language differences, and medical jargon can impede understanding. Research shows that integrating visual aids improves retention: only 14% of information is remembered from text alone, compared with 80% when images are included.

This project explores the use of extended reality (XR), including virtual reality (VR), augmented reality (AR), and mixed reality (MR), to enhance informed consent. By integrating personalized text, visuals, and interactivity tailored to each patient’s education level, language, age, gender, and ethnicity, the project aims to improve patient understanding and satisfaction during pre-surgery consultations.